Starting with version 0.14.0, OvenMediaEngine can encode the same source into multiple renditions at different bitrates and deliver them to the player.

As shown in the example configuration below, you can provide ABR by adding <Playlist> elements to <OutputProfile>. An output profile can contain multiple playlists, and each playlist is accessed by its <FileName>.

To access a playlist via LLHLS, use the following URL format:

http[s]://<domain>[:port]/<app>/<stream>/<FileName>.m3u8

To access a playlist via HLS, use:

http[s]://<domain>[:port]/<app>/<stream>/<FileName>.m3u8?format=ts

To access a playlist via WebRTC, use:

ws[s]://<domain>[:port]/<app>/<stream>/<FileName>

Note that <FileName> must not contain the keywords playlist or chunklist. These are reserved words used internally by the system.
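The URL rules above can be sketched as a small Python helper that builds the three playback URLs for a playlist and rejects a <FileName> containing a reserved keyword. The function names are hypothetical, and treating the keyword check as case-insensitive is an assumption.

```python
from typing import Optional
from urllib.parse import quote

# Keywords reserved by OvenMediaEngine; <FileName> must not contain them.
RESERVED_KEYWORDS = ("playlist", "chunklist")


def validate_file_name(file_name: str) -> None:
    """Raise ValueError if file_name contains a reserved keyword.
    The case-insensitive comparison is an assumption."""
    lowered = file_name.lower()
    for keyword in RESERVED_KEYWORDS:
        if keyword in lowered:
            raise ValueError(f"<FileName> must not contain {keyword!r}: {file_name!r}")


def playlist_urls(host: str, app: str, stream: str, file_name: str,
                  ws_host: Optional[str] = None, secure: bool = True) -> dict:
    """Build LLHLS, HLS (TS) and WebRTC URLs for one <Playlist>.

    host and ws_host are "domain[:port]" strings. WebRTC signalling often
    listens on a different port than LLHLS, so ws_host may be given separately.
    """
    validate_file_name(file_name)
    http_scheme = "https" if secure else "http"
    ws_scheme = "wss" if secure else "ws"
    path = "/".join(quote(part, safe="") for part in (app, stream, file_name))
    return {
        "llhls": f"{http_scheme}://{host}/{path}.m3u8",
        "hls": f"{http_scheme}://{host}/{path}.m3u8?format=ts",
        "webrtc": f"{ws_scheme}://{ws_host or host}/{path}",
    }


print(playlist_urls("example.com", "app", "stream", "abr")["webrtc"])
```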

To set up a <Rendition>, add a <Name> to each element of <Encodes> that the rendition will use, then reference that <Name> in <Rendition><Video> or <Rendition><Audio>.
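Because a rendition refers to encodes only by <Name>, a typo silently breaks the link. As a hypothetical pre-deployment check (not part of OvenMediaEngine), the Python sketch below parses an <OutputProfile> with the standard library and reports rendition references that match no encode name.

```python
import xml.etree.ElementTree as ET


def check_renditions(output_profile_xml: str) -> list:
    """Return problems found in an <OutputProfile>: rendition references
    whose <Video>/<Audio> value matches no <Name> under <Encodes>."""
    root = ET.fromstring(output_profile_xml)

    # Collect the <Name> of every <Video>/<Audio> encode.
    encode_names = set()
    for encode in root.findall("./Encodes/*"):
        name = encode.findtext("Name")
        if name:
            encode_names.add(name.strip())

    problems = []
    for playlist in root.findall("./Playlist"):
        for rendition in playlist.findall("Rendition"):
            for kind in ("Video", "Audio"):
                ref = rendition.findtext(kind)
                if ref and ref.strip() not in encode_names:
                    problems.append(
                        f"{rendition.findtext('Name')}: unknown {kind} {ref.strip()!r}"
                    )
    return problems


# Usage: the FHD rendition references an encode that does not exist.
SAMPLE = """<OutputProfile>
  <Playlist>
    <FileName>abr</FileName>
    <Rendition><Name>HD</Name><Video>video_720</Video><Audio>bypass_audio</Audio></Rendition>
    <Rendition><Name>FHD</Name><Video>video_1080</Video><Audio>bypass_audio</Audio></Rendition>
  </Playlist>
  <Encodes>
    <Audio><Name>bypass_audio</Name><Bypass>true</Bypass></Audio>
    <Video><Name>video_720</Name><Codec>h264</Codec></Video>
  </Encodes>
</OutputProfile>"""

print(check_renditions(SAMPLE))
```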

In the example below, three renditions of different quality are provided. The URL to play the abr playlist via LLHLS is https://domain:port/app/stream/abr.m3u8, and the WebRTC playback URL is wss://domain:port/app/stream/abr.

Even if you configure multiple codecs, OME automatically selects and streams the codecs that match each streaming protocol it supports. However, if you do not configure a codec that matches the streaming protocol you want to use, the stream will not be delivered over that protocol.

The following table lists the codecs that match each streaming protocol:

| Protocol | Codecs |
| --- | --- |
| WebRTC | VP8, H.264, Opus |
| LLHLS | H.264, H.265, AAC |

Therefore, configure your encodes according to the table. To stream using LLHLS, you need H.264 or H.265 video and AAC audio; to stream using WebRTC, you need Opus audio.

Also, if you want WebRTC to work on all platforms, configure both VP8 and H.264, because browsers differ in codec support: some support only VP8, some only H.264, and some both.

However, don't worry: if the codecs are configured correctly, OME automatically sends the stream in the codec requested by the browser.
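The table can be expressed as a small lookup to check which protocols a set of configured encodes can serve. This Python sketch illustrates the matching rule described above; it is not OME's internal selection logic, and the function names are hypothetical.

```python
# Codecs that match each protocol, transcribed from the table above
# (lowercase values as used in <Codec>).
PROTOCOL_CODECS = {
    "WebRTC": {"video": {"vp8", "h264"}, "audio": {"opus"}},
    "LLHLS": {"video": {"h264", "h265"}, "audio": {"aac"}},
}


def playable_protocols(video_codecs, audio_codecs):
    """Return the protocols for which the configured encodes include at least
    one matching video codec and one matching audio codec."""
    videos = {c.lower() for c in video_codecs}
    audios = {c.lower() for c in audio_codecs}
    return [
        protocol
        for protocol, codecs in PROTOCOL_CODECS.items()
        if codecs["video"] & videos and codecs["audio"] & audios
    ]


def covers_all_webrtc_browsers(video_codecs):
    """True if both VP8 and H.264 are configured, as recommended for
    WebRTC on all platforms."""
    return {"vp8", "h264"} <= {c.lower() for c in video_codecs}


print(playable_protocols(["h264"], ["aac", "opus"]))  # both protocols
print(playable_protocols(["h264"], ["aac"]))          # LLHLS only
print(covers_all_webrtc_browsers(["h264"]))           # False
```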

<OutputProfile>

<Name>bypass_stream</Name>

<OutputStreamName>${OriginStreamName}</OutputStreamName>

<!--LLHLS URL : https://domain/app/stream/abr.m3u8 -->

<Playlist>

<Name>For LLHLS</Name>

<FileName>abr</FileName>

<Options> <!-- Optional -->

<!--

Automatically switch rendition in WebRTC ABR

[Default] : true

-->

<WebRtcAutoAbr>true</WebRtcAutoAbr>

<EnableTsPackaging>true</EnableTsPackaging>

</Options>

<Rendition>

<Name>Bypass</Name>

<Video>bypass_video</Video>

<Audio>bypass_audio</Audio>

</Rendition>

<Rendition>

<Name>FHD</Name>

<Video>video_1280</Video>

<Audio>bypass_audio</Audio>

</Rendition>

<Rendition>

<Name>HD</Name>

<Video>video_720</Video>

<Audio>bypass_audio</Audio>

</Rendition>

</Playlist>

<!--LLHLS URL : https://domain/app/stream/llhls.m3u8 -->

<Playlist>

<Name>Change Default</Name>

<FileName>llhls</FileName>

<Rendition>

<Name>HD</Name>

<Video>video_720</Video>

<Audio>bypass_audio</Audio>

</Rendition>

</Playlist>

<Encodes>

<Audio>

<Name>bypass_audio</Name>

<Bypass>true</Bypass>

</Audio>

<Video>

<Name>bypass_video</Name>

<Bypass>true</Bypass>

</Video>

<Audio>

<Codec>opus</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

</Audio>

<Video>

<Name>video_1280</Name>

<Codec>h264</Codec>

<Bitrate>5024000</Bitrate>

<Framerate>30</Framerate>

<Width>1920</Width>

<Height>1080</Height>

<Preset>faster</Preset>

</Video>

<Video>

<Name>video_720</Name>

<Codec>h264</Codec>

<Bitrate>2024000</Bitrate>

<Framerate>30</Framerate>

<Width>1280</Width>

<Height>720</Height>

<Preset>faster</Preset>

</Video>

</Encodes>

</OutputProfile>

OvenMediaEngine supports GPU-based hardware decoding and encoding. The currently supported GPU acceleration devices are Intel Quick Sync Video and NVIDIA GPUs. This article explains how to install the drivers and configure OvenMediaEngine to use your GPU.

If you are using an NVIDIA graphics card, follow the guide below to install the driver. The provided installation script supports CentOS 7/8 and Ubuntu 18/20. To install the driver on another OS, refer to the manual installation guide.

On CentOS, the nouveau driver must be uninstalled first. After uninstalling it, reboot; then install the new NVIDIA driver and reboot again. Therefore, the install script must be executed twice.

How to check driver installation

After the driver installation is complete, check whether the driver is operating normally with the nvidia-smi command.

After installing the driver, reinstall the open-source libraries using prerequisites.sh so that the external libraries can use the installed graphics driver.

Please refer to the link for how to build and run.

To use hardware acceleration, enable <Decoder> and/or <Encoder> under <HWAccels> in <OutputProfiles>. When enabled, a hardware codec is used automatically when a stream is created; if it is unavailable due to insufficient hardware resources, a software codec is used instead.

The codecs that can use hardware acceleration in OvenMediaEngine are shown in the table below. Supported codecs vary by GPU; if a hardware codec is not available, check whether your GPU supports that codec.

D: Decoding, E: Encoding

NVIDIA NVDEC Video Format :

NVIDIA NVENC Video Format :

CUDA Toolkit Installation Guide :

NVIDIA Container Toolkit :

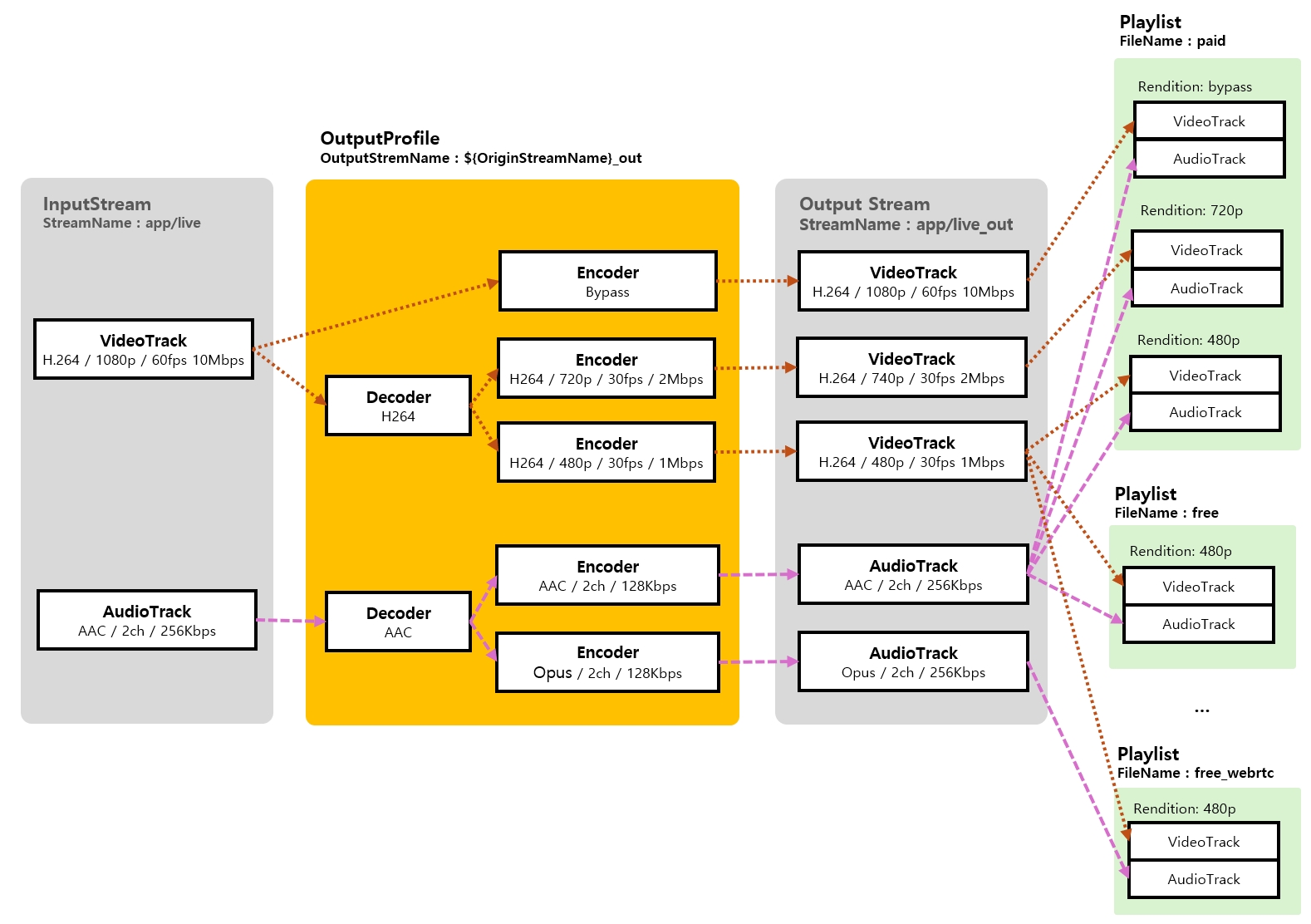

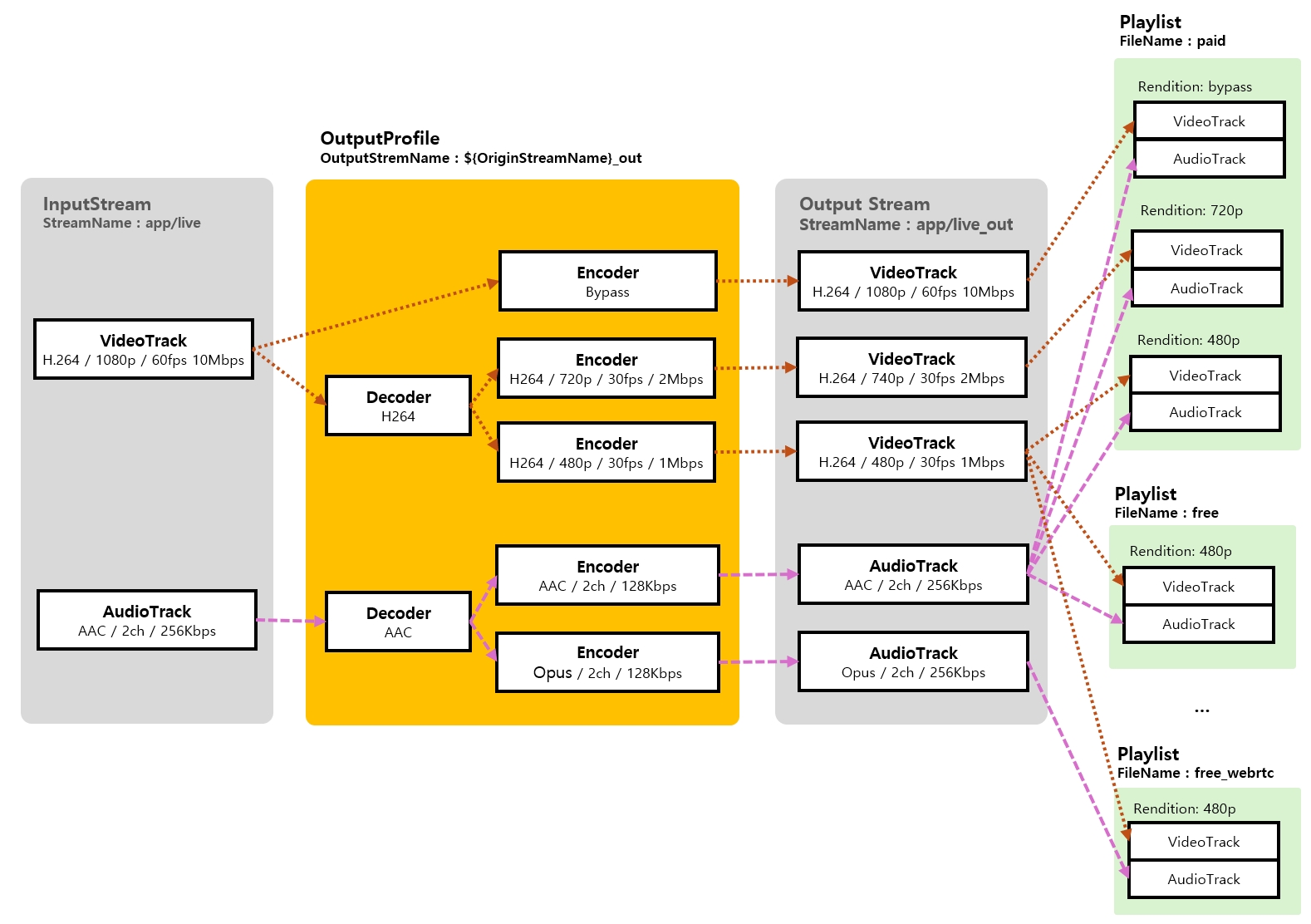

OvenMediaEngine supports live transcoding for Adaptive Bitrate (ABR) streaming and protocol compatibility. Each protocol supports different codecs, and ABR requires renditions with different resolutions and bitrates. With OutputProfile, you can convert codecs, resolutions, and bitrates, and with Playlist you can combine the encoded tracks into various ABR sets.

This document explains how to configure encoding settings and set up playlists.

Transcoding and Adaptive Streaming Architecture

This section explains how to define output streams, change the codec, bitrate, resolution, frame rate, sample rate, and channels for video/audio, as well as how to use the bypass method.

This section explains how to use a Playlist to assemble ABR streams by selecting tracks encoded in various qualities.

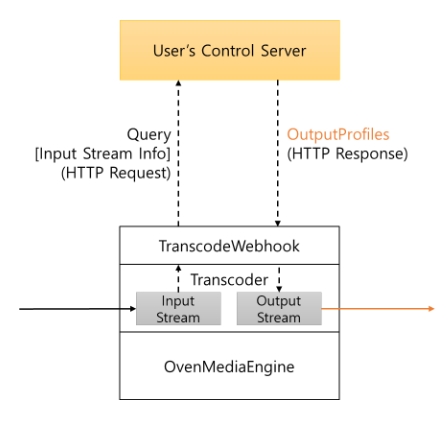

The transcoding webhook feature is used when dynamic changes to encoding and ABR configuration are needed based on the type or quality of the input stream.

These are the supported decoding and encoding codecs.

Decoding

| Type | Codecs |
| --- | --- |
| Video | VP8, H.264, H.265 |
| Audio | AAC, Opus, MP3 |

These are the officially supported hardware accelerators.

| Hardware accelerator | Status |
| --- | --- |
| NVIDIA GPU |  |
| Xilinx Alveo U30 MA | Enterprise only |
| NILOGAN (NETINT VPU) | Experimental |
| Quick Sync Video | Deprecated |

The <OutputProfile> setting allows incoming streams to be re-encoded via the <Encodes> setting to create a new output stream. The name of the new output stream is determined by the rules set in <OutputStreamName>, and the newly created stream can be used according to the streaming URL format.

For example, with <OutputStreamName>${OriginStreamName}_bypass</OutputStreamName>, if the incoming stream name is stream, the output stream becomes stream_bypass.
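The naming rule is a plain macro substitution. The Python sketch below expands ${OriginStreamName} and builds playback URLs shaped like the stream_bypass example URLs in this document. The helper names are hypothetical, and the ports (3333 for WebRTC, 8080 for LLHLS/HLS) are taken from those example URLs, so adjust them to your own configuration.

```python
ORIGIN_MACRO = "${OriginStreamName}"


def output_stream_name(template: str, origin_stream_name: str) -> str:
    """Expand the ${OriginStreamName} macro in an <OutputStreamName> value."""
    return template.replace(ORIGIN_MACRO, origin_stream_name)


def example_playback_urls(host: str, app: str, stream: str) -> dict:
    """Playback URLs in the same shape as the document's stream_bypass examples.
    Ports 3333 (WebRTC) and 8080 (LLHLS/HLS) are copied from those examples."""
    return {
        "webrtc": f"ws://{host}:3333/{app}/{stream}",
        "llhls": f"http://{host}:8080/{app}/{stream}/llhls.m3u8",
        "hls": f"http://{host}:8080/{app}/{stream}/ts:playlist.m3u8",
    }


name = output_stream_name("${OriginStreamName}_bypass", "stream")
print(name)  # stream_bypass
print(example_playback_urls("192.168.0.1", "app", name)["llhls"])
```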

A Docker image build script that supports NVIDIA GPUs is provided separately. Please refer to the previous guide for how to build it.

After installing the driver to use the VPU, reinstall the open-source libraries using prerequisites.sh so that the external libraries can use the installed driver. You also need to unzip the FFmpeg patch provided by NETINT into a specific path.

| Device | H.264 | H.265 |
| --- | --- | --- |
| QuickSync | D / E | D / E |
| NVIDIA | D / E | D / E |
| Docker on NVIDIA Container Toolkit | D / E | D / E |
| Xilinx U30MA | D / E | D / E |

Quick Sync Video format support: https://en.wikipedia.org/wiki/Intel_Quick_Sync_Video

Xilinx Video SDK : https://xilinx.github.io/video-sdk/v3.0/index.html

(curl -LOJ https://github.com/AirenSoft/OvenMediaEngine/archive/master.tar.gz && tar xvfz OvenMediaEngine-master.tar.gz)

OvenMediaEngine-master/misc/install_nvidia_driver.sh

$ nvidia-smi

Thu Jun 17 10:20:23 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 465.19.01 Driver Version: 465.19.01 CUDA Version: 11.3 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:01:00.0 Off | N/A |

| 20% 35C P8 N/A / 75W | 156MiB / 1997MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+-

OvenMediaEngine-master/misc/prerequisites.sh --enable-nvc

ldconfig -p | grep libxcoder_logan.so

docker run -d ... --gpus all airensoft/ovenmediaengine:dev

<OutputProfiles>

<HWAccels>

<!--

Setting for Hardware Modules.

- nv : Nvidia Video Codec SDK

- xma : Xilinx Media Accelerator

- qsv : Intel Quick Sync Video

- nilogan : Netint VPU

You can use multiple modules by separating them with commas.

For example, if you want to use xma and nv, you can set it as follows.

<Modules>[ModuleName]:[DeviceId],[ModuleName]:[DeviceId],...</Modules>

<Modules>xma:0,nv:0</Modules>

-->

<Decoder>

<Enable>true</Enable>

<Modules>nv</Modules>

</Decoder>

<Encoder>

<Enable>true</Enable>

<Modules>nv</Modules>

</Encoder>

</HWAccels>

<OutputProfile>

...

</OutputProfile>

</OutputProfiles>

OvenMediaEngine-master/misc/install_nvidia_docker_container.sh

OvenMediaEngine-master/Dockerfile.cuda

OvenMediaEngine-master/Dockerfile.cuda.local

./prerequisites.sh --enable-nilogan --nilogan-path=/root/T4xx/release/FFmpeg-n5.0_t4xx_patch

Encoding

| Type | Codecs |
| --- | --- |
| Video | VP8, H.264, H.265 |
| Audio | AAC, Opus |
| Image | Jpeg, Png, WebP |

WebRTC ws://192.168.0.1:3333/app/stream_bypass

LLHLS http://192.168.0.1:8080/app/stream_bypass/llhls.m3u8

HLS http://192.168.0.1:8080/app/stream_bypass/ts:playlist.m3u8

You can set the video profile as follows:

| Property | Description | Note |
| --- | --- | --- |
| Codec* | Type of codec to be encoded | See the table below |
| Bitrate* | Bits per second | |
| Name* | Encode name for Renditions | No duplicates allowed |
| Width | Width of resolution | |
| Height | Height of resolution | |
| Framerate | Frames per second | |

* required

Video

| Codec | Value | Modules |
| --- | --- | --- |
| VP8 | vp8 | SW: libvpx* |
| H.264 | h264 | SW: openh264*, x264 / HW: nv, xma |
| H.265 (Hardware Only) | h265 | HW: nv, xma |

The table below maps the presets of each codec library to OvenMediaEngine presets. Slower presets produce better quality but use more resources, whereas faster presets have lower quality and better performance. Choose a preset according to your system environment and service purpose.

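| OME preset | openh264 | nvenc | libvpx |
| --- | --- | --- | --- |
| slower | QP (10-39) | p7 | best |
| slow | QP (16-45) | p6 | best |
| medium | QP (24-51) | p5 | good |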

References

https://trac.ffmpeg.org/wiki/Encode/VP8

https://docs.nvidia.com/video-technologies/video-codec-sdk/nvenc-preset-migration-guide/
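For scripting or documentation purposes, the preset mapping can be kept as a lookup table. In this Python sketch, the rows come from the preset table in this document (including the fast and faster rows). Attributing the columns to openh264 QP ranges, NVENC presets, and libvpx deadlines is an inference from the table values and the reference links, and the helper name is hypothetical.

```python
# OME preset -> per-library value, transcribed from the preset table.
# Column attribution (openh264 QP range, NVENC preset, libvpx deadline)
# is inferred from the table and its reference links.
PRESETS = {
    "slower": {"openh264": (10, 39), "nvenc": "p7", "libvpx": "best"},
    "slow":   {"openh264": (16, 45), "nvenc": "p6", "libvpx": "best"},
    "medium": {"openh264": (24, 51), "nvenc": "p5", "libvpx": "good"},
    "fast":   {"openh264": (32, 51), "nvenc": "p4", "libvpx": "realtime"},
    "faster": {"openh264": (40, 51), "nvenc": "p3", "libvpx": "realtime"},
}


def library_preset(ome_preset: str, library: str):
    """Look up what an OME <Preset> corresponds to in a given encoder library."""
    try:
        return PRESETS[ome_preset.lower()][library]
    except KeyError:
        raise ValueError(f"unknown preset/library: {ome_preset!r}/{library!r}") from None


print(library_preset("faster", "nvenc"))     # p3
print(library_preset("medium", "openh264"))  # (24, 51)
```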

You can set the audio profile as follows:

| Property | Description | Note |
| --- | --- | --- |
| Codec* | Type of codec to be encoded | See the table below |
| Bitrate* | Bits per second | |
| Name* | Encode name for Renditions | No duplicates allowed |
| Samplerate | Samples per second | |
| Channel | The number of audio channels | |
| Modules | An encoder library can be specified; otherwise, the default is used | See the table below |

* required

You can create an audio-only output profile by specifying an Audio profile and omitting the Video profile.

Audio

| Codec | Value | Modules |
| --- | --- | --- |
| AAC | aac | SW: fdkaac* |
| Opus | opus | SW: libopus* |

You can configure Video and Audio to bypass transcoding as follows:

You need to consider codec compatibility with some browsers. For example, Chrome supports only the Opus audio codec for WebRTC playback, so if you bypass incoming audio in another codec, the stream cannot be played in Chrome.

WebRTC does not support AAC, so even if video bypasses transcoding, AAC audio must be encoded to Opus.

If the input stream already has the same codec and quality as the profile to be encoded for the output stream, re-encoding it would consume a lot of system resources for no benefit. If the input track matches all the conditions in BypassIfMatch, it is passed through without encoding.

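Video

| Condition | Operators | Description |
| --- | --- | --- |
| Codec (Optional) | eq | Compare video codecs |
| Width (Optional) | eq, lte, gte | Compare the horizontal pixels of the video resolution |
| Height (Optional) | eq, lte, gte | Compare the vertical pixels of the video resolution |
| SAR (Optional) | eq | Compare ratio of video resolution |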

* eq: equal to / lte: less than or equal to / gte: greater than or equal to

Audio

| Condition | Operators | Description |
| --- | --- | --- |
| Codec (Optional) | eq | Compare audio codecs |
| Samplerate (Optional) | eq, lte, gte | Compare the sampling rate of the audio |
| Channel (Optional) | eq, lte, gte | Compare the number of audio channels |

* eq: equal to / lte: less than or equal to / gte: greater than or equal to
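The BypassIfMatch rule (every listed condition must hold, comparing the input track against the encode profile) can be sketched in Python as follows. This illustrates the documented semantics and is not OvenMediaEngine's implementation; the function names are hypothetical, and treating a missing property as a mismatch is an assumption.

```python
import operator

# Operators allowed in <BypassIfMatch>.
OPERATORS = {"eq": operator.eq, "lte": operator.le, "gte": operator.ge}


def _normalize(value):
    # Codec names may differ in case (e.g. "AAC" vs "aac").
    return value.lower() if isinstance(value, str) else value


def should_bypass(conditions: dict, input_track: dict, profile: dict) -> bool:
    """Return True only if every condition holds as `input <op> profile`.

    conditions maps a property (e.g. "Samplerate") to "eq", "lte" or "gte".
    A property missing from either side counts as "no match" (assumption).
    """
    for prop, op_name in conditions.items():
        if prop not in input_track or prop not in profile:
            return False
        compare = OPERATORS[op_name]
        if not compare(_normalize(input_track[prop]), _normalize(profile[prop])):
            return False
    return True


# Mirrors the cond_audio_aac example: Codec eq, Samplerate lte, Channel eq.
aac_profile = {"Codec": "aac", "Samplerate": 48000, "Channel": 2}
conditions = {"Codec": "eq", "Samplerate": "lte", "Channel": "eq"}

print(should_bypass(conditions, {"Codec": "AAC", "Samplerate": 44100, "Channel": 2}, aac_profile))  # True
print(should_bypass(conditions, {"Codec": "AAC", "Samplerate": 96000, "Channel": 2}, aac_profile))  # False
```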

To support WebRTC and LLHLS, AAC and Opus codecs must be supported at the same time. Use the settings below to reduce unnecessary audio encoding.

If a video track with lower quality than the encoding option is input, BypassIfMatch can prevent unnecessary upscaling. SAR (Storage Aspect Ratio) is the ratio of the original pixel dimensions (width:height). In the example below, even if the width and height of the original video are less than or equal to those set in the encoding option, the video is encoded instead of bypassed when its aspect ratio differs.
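The SAR condition is stricter than it may look: a resolution that is only approximately 16:9 does not match. The Python sketch below assumes SAR is compared as an exact width:height ratio (OME may apply its own rules) and reproduces the conditions of the prevent_upscaling_video example. The function names are hypothetical.

```python
from fractions import Fraction


def sar(width: int, height: int) -> Fraction:
    """Width:height ratio reduced to lowest terms, e.g. 1280x720 -> 16/9."""
    return Fraction(width, height)


def bypass_prevent_upscaling(codec: str, width: int, height: int,
                             target_codec: str = "h264",
                             target_width: int = 1280,
                             target_height: int = 720) -> bool:
    """Codec eq, Width lte, Height lte, SAR eq, as in prevent_upscaling_video."""
    return (codec.lower() == target_codec
            and width <= target_width
            and height <= target_height
            and sar(width, height) == sar(target_width, target_height))


print(bypass_prevent_upscaling("h264", 640, 360))    # True: smaller, same 16:9 ratio
print(bypass_prevent_upscaling("h264", 640, 480))    # False: 4:3 differs from 16:9
print(bypass_prevent_upscaling("h264", 854, 480))    # False: 427:240 is not exactly 16:9
print(bypass_prevent_upscaling("h264", 1920, 1080))  # False: larger than the target
```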

To transcode with the same quality as the original, see the sample below for the parameters OME supports for keeping the original values. If you omit the Width, Height, Framerate, Samplerate, and Channel parameters, the stream is transcoded with the same values as the original.

To change the video resolution when transcoding, set Width and Height in the Video encode options. If you don't know the resolution of the original, it is difficult to keep the aspect ratio after transcoding. To solve this, you can specify only one of them: for example, if you set only Width, Height is calculated automatically from the aspect ratio of the original video, and vice versa.
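The automatic calculation of the missing dimension amounts to scaling by the source aspect ratio. The Python sketch below shows the arithmetic; the function name is hypothetical, and rounding to an even number is an assumption about choosing an encoder-friendly value, not documented OME behavior.

```python
from typing import Optional, Tuple


def output_resolution(src_width: int, src_height: int,
                      width: Optional[int] = None,
                      height: Optional[int] = None) -> Tuple[int, int]:
    """Fill in a missing Width or Height from the source aspect ratio.

    Rounding to the nearest even value is an assumption (many H.264
    encoders require even dimensions), not documented OME behavior.
    """
    def even(x: float) -> int:
        return int(round(x / 2)) * 2

    if width is not None and height is not None:
        return width, height
    if width is not None:
        return width, even(width * src_height / src_width)
    if height is not None:
        return even(height * src_width / src_height), height
    return src_width, src_height  # neither set: keep the original size


print(output_resolution(1920, 1080, width=1280))  # (1280, 720)
print(output_resolution(1920, 1080, height=720))  # (1280, 720)
print(output_resolution(640, 480, width=1280))    # (1280, 960)
```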

The software decoder uses 2 threads by default. If the CPU speed is too low for decoding, increasing the thread count can improve performance.

<OutputProfiles>

<!--

Common setting for decoders. Decodes is optional.

<Decodes>

Number of threads for the decoder.

<ThreadCount>2</ThreadCount>

By default, OME decodes all video frames. If OnlyKeyframes is true, only the keyframes will be decoded, massively improving thumbnail performance at the cost of having less control over when exactly they are generated

<OnlyKeyframes>false</OnlyKeyframes>

</Decodes>

-->

<OutputProfile>

<Name>bypass_stream</Name>

<OutputStreamName>${OriginStreamName}_bypass</OutputStreamName>

<Encodes>

<Video>

<Bypass>true</Bypass>

</Video>

<Audio>

<Name>aac_audio</Name>

<Codec>aac</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

<BypassIfMatch>

<Codec>eq</Codec>

</BypassIfMatch>

</Audio>

<Audio>

<Name>opus_audio</Name>

<Codec>opus</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

<BypassIfMatch>

<Codec>eq</Codec>

</BypassIfMatch>

</Audio>

</Encodes>

</OutputProfile>

</OutputProfiles>

<Encodes>

<Video>

<Name>h264_hd</Name>

<Codec>h264</Codec>

<Width>1280</Width>

<Height>720</Height>

<Bitrate>2000000</Bitrate>

<Framerate>30.0</Framerate>

<KeyFrameInterval>30</KeyFrameInterval>

<BFrames>0</BFrames>

<!--

<Preset>fast</Preset>

<ThreadCount>4</ThreadCount>

<Lookahead>5</Lookahead>

<Modules>x264</Modules>

-->

</Video>

</Encodes>

<Encodes>

<Audio>

<Name>opus_128</Name>

<Codec>opus</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

</Audio>

</Encodes>

<Video>

<Bypass>true</Bypass>

</Video>

<Audio>

<Bypass>true</Bypass>

</Audio>

<Encodes>

<Video>

<Bypass>true</Bypass>

</Video>

<Audio>

<Codec>opus</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

</Audio>

</Encodes>

<Encodes>

<Video>

<Bypass>true</Bypass>

</Video>

<Audio>

<Name>cond_audio_aac</Name>

<Codec>aac</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

<BypassIfMatch>

<Codec>eq</Codec>

<Samplerate>lte</Samplerate>

<Channel>eq</Channel>

</BypassIfMatch>

</Audio>

<Audio>

<Name>cond_audio_opus</Name>

<Codec>opus</Codec>

<Bitrate>128000</Bitrate>

<Samplerate>48000</Samplerate>

<Channel>2</Channel>

<BypassIfMatch>

<Codec>eq</Codec>

<Samplerate>lte</Samplerate>

<Channel>eq</Channel>

</BypassIfMatch>

</Audio>

</Encodes>

<Encodes>

<Video>

<Name>prevent_upscaling_video</Name>

<Codec>h264</Codec>

<Bitrate>2048000</Bitrate>

<Width>1280</Width>

<Height>720</Height>

<Framerate>30</Framerate>

<BypassIfMatch>

<Codec>eq</Codec>

<Width>lte</Width>

<Height>lte</Height>

<SAR>eq</SAR>

</BypassIfMatch>

</Video>

</Encodes>

<Encodes>

<Video>

<Codec>vp8</Codec>

<Bitrate>2000000</Bitrate>

</Video>

<Audio>

<Codec>opus</Codec>

<Bitrate>128000</Bitrate>

</Audio>

</Encodes>

<Encodes>

<Video>

<Codec>h264</Codec>

<Bitrate>2000000</Bitrate>

<Width>1280</Width>

<!-- Height is automatically calculated as the original video ratio -->

<Framerate>30.0</Framerate>

</Video>

<Video>

<Codec>h264</Codec>

<Bitrate>2000000</Bitrate>

<!-- Width is automatically calculated as the original video ratio -->

<Height>720</Height>

<Framerate>30.0</Framerate>

</Video>

</Encodes>

<OutputProfiles>

<!--

Common setting for decoders. Decodes is optional.

-->

<Decodes>

<!-- Number of threads for the decoder.-->

<ThreadCount>2</ThreadCount>

</Decodes>

<OutputProfile>

....

</OutputProfile>

</OutputProfiles>

| Property | Description | Note |
| --- | --- | --- |
| KeyFrameInterval | Number of frames between two keyframes (0~600) | Default is framerate (i.e. 1 second) |
| BFrames | Number of B-frames (0~16) | Default is 0 |
| Profile | H.264-only encoding profile (baseline, main, high) | |
| Preset | Presets of encoding quality and performance | See the table below |
| ThreadCount | Number of threads in encoding | |
| Lookahead | Number of frames to look ahead | Default is 0; x264: 0-250, nvenc: 0-31, xma: 0-20 |
| Modules | An encoder library can be specified; otherwise, the default is used | See the table below |

HW: nv, xma

good

| OME preset | openh264 | nvenc | libvpx |
| --- | --- | --- | --- |
| fast | QP (32-51) | p4 | realtime |
| faster | QP (40-51) | p3 | realtime |

Compare ratio of video resolution

TranscodeWebhook allows OvenMediaEngine to use OutputProfiles from the Control Server's response instead of those in the local configuration (Server.xml). OvenMediaEngine requests OutputProfiles from the Control Server whenever a stream is created, so you can specify different profiles for each stream.


Enable (required) Enables or disables the TranscodeWebhook settings.

ControlServerUrl (required) The URL of the Control Server. Both HTTP and HTTPS are supported.

SecretKey (optional) The secret key used to authenticate with the Control Server. OvenMediaEngine computes an HMAC-SHA1 of the HTTP payload using this key and adds the result to the X-OME-Signature HTTP header.

Timeout (optional, default: 1500) The timeout value used when connecting to the Control Server.

UseLocalProfilesOnConnectionFailure (optional, default: true) Determines whether to use the local OutputProfiles when communication with the Control Server fails. If set to false, a communication failure with the Control Server causes output stream creation to fail.

UseLocalProfilesOnServerDisallow (optional, default: false) The policy applied when the Control Server responds with 200 OK but sets "allowed" to false.

UseLocalProfilesOnErrorResponse (optional, default: false) The policy applied when the Control Server responds with an error status code such as 400 Bad Request, 404 Not Found, or 500 Internal Server Error.
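On the Control Server side, the X-OME-Signature header can be checked before trusting the request. The Python sketch below assumes the signature is an HMAC-SHA1 over the raw request body keyed with SecretKey and encoded as base64url without padding; the encoding is an assumption, so compare it against a real request from your OME version. The function names are hypothetical, and the key is the one from the configuration example.

```python
import base64
import hashlib
import hmac


def compute_signature(secret_key: str, payload: bytes) -> str:
    """HMAC-SHA1 over the raw request body, base64url-encoded without '='
    padding. The encoding is an assumption; verify it against a real request."""
    digest = hmac.new(secret_key.encode("utf-8"), payload, hashlib.sha1).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def verify_signature(secret_key: str, payload: bytes, header_value: str) -> bool:
    """Constant-time comparison against the X-OME-Signature header value."""
    expected = compute_signature(secret_key, payload).encode("ascii")
    received = header_value.strip().rstrip("=").encode("utf-8")
    return hmac.compare_digest(expected, received)


body = b'{"source": "TCP://192.168.0.220:2216"}'
signature = compute_signature("abc123!@#", body)
print(verify_signature("abc123!@#", body, signature))  # True
print(verify_signature("wrong-key", body, signature))  # False
```

Compute the signature over the body bytes exactly as received, before parsing the JSON, since re-serializing it can change whitespace or key order.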

OvenMediaEngine sends requests to the Control Server in the following format.

The Control Server responds in the following format to specify OutputProfiles for the respective stream.

The outputProfiles section in the JSON structure mirrors the configuration in Server.xml and allows for detailed settings as shown below:

<Applications>

<Application>

<Name>app</Name>

<!-- Application type (live/vod) -->

<Type>live</Type>

<TranscodeWebhook>

<Enable>true</Enable>

<ControlServerUrl>http://example.com/webhook</ControlServerUrl>

<SecretKey>abc123!@#</SecretKey>

<Timeout>1500</Timeout>

<UseLocalProfilesOnConnectionFailure>true</UseLocalProfilesOnConnectionFailure>

<UseLocalProfilesOnServerDisallow>false</UseLocalProfilesOnServerDisallow>

<UseLocalProfilesOnErrorResponse>false</UseLocalProfilesOnErrorResponse>

</TranscodeWebhook>

</Application>

</Applications>

POST /configured/target/url/ HTTP/1.1

Content-Length: 1482

Content-Type: application/json

Accept: application/json

X-OME-Signature: f871jd991jj1929jsjd91pqa0amm1

{

"source": "TCP://192.168.0.220:2216",

"stream": {

"name": "stream",

"virtualHost": "default",

"application": "app",

"sourceType": "Rtmp",

"sourceUrl": "TCP://192.168.0.220:2216",

"createdTime": "2025-06-05T14:43:54.001+09:00",

"tracks": [

{

"id": 0,

"name": "Video",

"type": "Video",

"video": {

"bitrate": 10000000,

"bitrateAvg": 0,

"bitrateConf": 10000000,

"bitrateLatest": 21845,

"bypass": false,

"codec": "H264",

"deltaFramesSinceLastKeyFrame": 0,

"framerate": 30.0,

"framerateAvg": 0.0,

"framerateConf": 30.0,

"framerateLatest": 0.0,

"hasBframes": false,

"width": 1280,

"height": 720,

"keyFrameInterval": 1.0,

"keyFrameIntervalAvg": 1.0,

"keyFrameIntervalConf": 0.0,

"keyFrameIntervalLatest": 0.0

}

},

{

"id": 1,

"name": "Audio",

"type": "Audio",

"audio": {

"bitrate": 160000,

"bitrateAvg": 0,

"bitrateConf": 160000,

"bitrateLatest": 21845,

"bypass": false,

"channel": 2,

"codec": "AAC",

"samplerate": 48000

}

},

{

"id": 2,

"name": "Data",

"type": "Data"

}

]

}

}

HTTP/1.1 200 OK

Content-Length: 886

Content-Type: application/json

Connection: close

{

"allowed": true,

"reason": "it will be output to the log file when `allowed` is false",

"outputProfiles": {

"outputProfile": [

{

"name": "bypass",

"outputStreamName": "${OriginStreamName}",

"encodes": {

"videos": [

{

"name": "bypass_video",

"bypass": "true"

}

],

"audios": [

{

"name": "bypass_audio",

"bypass": true

}

]

},

"playlists": [

{

"fileName": "default",

"name": "default",

"renditions": [

{

"name": "bypass",

"video": "bypass_video",

"audio": "bypass_audio"

}

]

}

]

}

]

}

}

"outputProfiles": {

"hwaccels": {

"decoder": {

"enable": false

},

"encoder": {

"enable": false

}

},

"decodes": {

"threadCount": 2,

"onlyKeyframes": false

},

"outputProfile": [

{

"name": "bypass",

"outputStreamName": "${OriginStreamName}",

"encodes": {

"videos": [

{

"name": "bypass_video",

"bypass": "true"

},

{

"name": "video_h264_1080p",

"codec": "h264",

"width": 1920,

"height": 1080,

"bitrate": 5024000,

"framerate": 30,

"keyFrameInterval": 60,

"bFrames": 0,

"preset": "faster"

},

{

"name": "video_h264_720p",

"codec": "h264",

"width": 1280,

"height": 720,

"bitrate": 2024000,

"framerate": 30,

"keyFrameInterval": 60,

"bFrames": 0,

"preset": "faster"

}

],

"audios": [

{

"name": "aac_audio",

"codec": "aac",

"bitrate": 128000,

"samplerate": 48000,

"channel": 2,

"bypassIfMatch": {

"codec": "eq"

}

},

{

"name": "opus_audio",

"codec": "opus",

"bitrate": 128000,

"samplerate": 48000,

"channel": 2,

"bypassIfMatch": {

"codec": "eq"

}

}

],

"images": [

{

"codec": "jpeg",

"framerate": 1,

"width": 320,

"height": 180

}

]

},

"playlists": [

{

"fileName": "abr",

"name": "abr",

"options": {

"enableTsPackaging": true,

"webRtcAutoAbr": true,

"hlsChunklistPathDepth": -1

},

"renditions": [

{

"name": "1080p_aac",

"video": "video_h264_1080p",

"audio": "aac_audio"

},

{

"name": "720p_aac",

"video": "video_h264_720p",

"audio": "aac_audio"

},

{

"name": "1080p_opus",

"video": "video_h264_1080p",

"audio": "opus_audio"

},

{

"name": "720p_opus",

"video": "video_h264_720p",

"audio": "opus_audio"

}

]

}

]

}

]

}
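To illustrate how a Control Server can pick different profiles per stream based on the request, here is a minimal Python sketch using only the standard library. The policy (adding a 720p rendition only for inputs taller than 720 pixels), the handler name, and the port in the comment are hypothetical, and a real server should also verify X-OME-Signature. The response follows the format shown above.

```python
import json
from http.server import BaseHTTPRequestHandler


def choose_output_profiles(request: dict) -> dict:
    """Hypothetical policy: always offer the source (bypass), and add a 720p
    H.264 rendition only when the input video is taller than 720 pixels."""
    tracks = request.get("stream", {}).get("tracks", [])
    video = next((t["video"] for t in tracks
                  if t.get("type") == "Video" and "video" in t), None)

    videos = [{"name": "bypass_video", "bypass": True}]
    renditions = [{"name": "source", "video": "bypass_video", "audio": "aac_audio"}]
    if video is not None and video.get("height", 0) > 720:
        videos.append({"name": "video_h264_720p", "codec": "h264", "width": 1280,
                       "height": 720, "bitrate": 2024000, "framerate": 30})
        renditions.append({"name": "720p", "video": "video_h264_720p",
                           "audio": "aac_audio"})

    return {
        "allowed": True,
        "outputProfiles": {
            "outputProfile": [{
                "name": "abr",
                "outputStreamName": "${OriginStreamName}",
                "encodes": {
                    "videos": videos,
                    "audios": [{"name": "aac_audio", "codec": "aac",
                                "bitrate": 128000, "samplerate": 48000,
                                "channel": 2, "bypassIfMatch": {"codec": "eq"}}],
                },
                "playlists": [{"name": "abr", "fileName": "abr",
                               "renditions": renditions}],
            }]
        },
    }


class TranscodeWebhookHandler(BaseHTTPRequestHandler):
    """Minimal HTTP handler; X-OME-Signature verification is omitted here."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            request = json.loads(self.rfile.read(length))
        except json.JSONDecodeError:
            request = None
        if not isinstance(request, dict):
            self.send_error(400, "expected a JSON object")
            return
        body = json.dumps(choose_output_profiles(request)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# To serve (hypothetical port):
#   from http.server import HTTPServer
#   HTTPServer(("0.0.0.0", 8000), TranscodeWebhookHandler).serve_forever()
```

Returning an error status, as the handler does for a malformed body, makes OvenMediaEngine follow the UseLocalProfilesOnErrorResponse policy.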